Navigating the Linguistic Universe with LLaMA2: A Comprehensive Guide to Meta's AI Masterpiece

Explore LLaMA2's breakthroughs in AI: multilingual capabilities, efficient data processing, and open-source innovation for business transformation.

Embark on an adventure through the vibrant cosmos of Meta AI's LLaMA (Large Language Model Meta AI), a trailblazer in the realm of large language models (LLMs). LLaMA isn't just efficient – it's the cherry on top of the AI cake, boasting not only open-source accessibility but also the green light for commercial use. Imagine a tool so powerful yet so within reach, ready to revolutionise how we think about language and AI.

In the fascinating world of LLaMA, one marvel simply wasn't enough. Enter the original LLaMA, making its grand entrance in February 2022, and its more muscular kin, LLaMA 2, bursting onto the scene in July 2023. It's a tale of two giants, each available in an array of sizes to fit every AI enthusiast's needs. We will focus on LLaMA2 in this article.

Welcome to our explorative series on Meta's LLaMA2, where we unravel the layers of one of the most advanced large language models in the AI sphere. This article marks the beginning of a journey that will not only illuminate the inner workings of LLaMA2 but also guide you through hands-on experiences with text generation and bespoke fine-tuning for specialised tasks. So, buckle up as we embark on this exciting adventure, one article at a time!

- Broadening Business Horizons: LLaMA2's prowess in multilingual language processing and generation empowers businesses to innovate in customer engagement and market analysis, offering a significant edge in global market connectivity and diversity.

- Maximising Efficiency, Minimising Risk: Leveraging LLaMA2's efficient Grouped Query Attention, businesses can swiftly handle extensive language data, enhancing decision-making while ensuring safe, professional AI-generated content to protect their brand reputation.

- Capitalising on Open-Source AI: LLaMA2's open-source availability democratises access to advanced AI, allowing businesses of all sizes to innovate and compete in the AI-driven market landscape without the burden of high costs.

Why LLaMA 2? Embracing the Future of Language Modeling

Optimised for Inference: A Speedster in Thought

Picture this: a language model not just brimming with knowledge, but one that's turbocharged for efficiency. Enter LLaMA 2, a model that doesn't just dream up words, but does it with the grace of a gazelle and the speed of a cheetah. Thanks to Grouped Query Attention (GQA), LLaMA 2 slices through computational demands like a hot knife through butter, ensuring that even the most complex of linguistic puzzles are solved with surprising alacrity.

Open Source: The Gift That Keeps on Giving

Now, imagine if this linguistic wizardry wasn't locked away in some ivory tower, but was instead a treasure available for all to explore. LLaMA 2 isn't just a marvel of technology; it's a beacon of accessibility. Open source and ready for commercial use, it's like having the keys to a language laboratory, inviting innovators, thinkers, and dreamers to tinker, transform, and transcend boundaries.

Safety First: The Conscientious Guardian

But what good is power without responsibility? The wizards behind LLaMA 2 didn't just build a language giant; they nurtured a considerate one. Through the lenses of Supervised Fine Tuning and Reinforcement Learning with Human Feedback (RLHF), along with the innovative use of reward modelling and Ghost Attention, LLaMA 2 stands as a sentinel against the dark alleys of toxic content. This model doesn't just speak; it thinks before it speaks, ensuring that its words are not just impactful, but also imbued with a sense of safety and respect.

Whether you're a developer looking to build the next big AI application, a researcher in pursuit of linguistic understanding, or just a curious mind, LLaMA 2 invites you to a world where words are not just words, but keys to unlocking infinite possibilities.

Deep Dive into LLaMA2: A Journey into AI's Linguistic Mastery

Step aside, ordinary language models, for LLaMA2 is here to reign supreme. This linguistic behemoth, trained on an astronomical 2 trillion tokens, is a veritable galaxy of words and symbols. Think of each token as a star, and LLaMA2's training data becomes its own cosmos of knowledge.

LLaMA2's multilingual prowess is equally impressive, effortlessly conversing in 20 languages. It's the polyglot of the AI world, a true master of tongues. And to cater to your diverse needs, LLaMA2 comes in three sizes: 7B, 13B, and a jaw-dropping 70B. Plus, you have two flavours to choose from: the original LLaMA2 and its chat-optimised sibling, LLaMA2-chat. Additionally, compared to LLaMA1, LLaMA2 has 4096 context window.

At its heart, LLaMA2 is powered by the venerable transformer architecture, the undisputed king of language modelling since 2018. This autoregressive transformer isn't just a bystander; it's a trendsetter, adding its own unique flair to the mix. Let's delve into these enhancements:

Pre-normalization, as adopted in advanced models like GPT-3, represents a subtle yet impactful tweak in the architecture of Transformer models, particularly concerning how they handle normalisation. Traditionally, layer normalisation in Transformers is applied to the output of each sub-layer within a Transformer block – a process known as post-normalization. However, pre-normalization flips this approach, applying normalisation to the input of each sub-layer instead. In practice, this means that before the data passes through either the self-attention mechanism or the feed-forward neural network within a Transformer block, it first undergoes normalisation. This approach is akin to preparing and stabilising the data before it undergoes the complex transformations within each layer. The benefits of pre-normalization are particularly pronounced in deeper models like GPT-3. It acts much like a seasoned chef carefully prepping ingredients before cooking, ensuring consistent quality and preventing any element from overwhelming the dish. By standardising the inputs right at the outset, pre-normalization aids in maintaining a balance in the flow of gradients throughout the network. This is crucial for avoiding issues like exploding or vanishing gradients, which can be detrimental in deep learning models. Essentially, pre-normalization contributes to a more stable and efficient training process, especially as models grow in size and complexity, ensuring that each layer receives data in a form that is conducive to effective learning and pattern recognition.

Grouped Query Attention (GQA): Traditionally, each head in the transformer model operates independently, wielding its own set of keys and values for data processing. GQA, however, ushers in an era of collaboration among these heads. In GQA's framework, heads are grouped into clusters, with each cluster sharing a single set of keys and values. For instance, in a model with 10 heads, grouping them into 2 clusters means instead of having 10 separate sets of key and value matrices, we now have only 2 sets of key and value matrices for the 2 clusters. Within each cluster, all 5 heads utilise the same key and value resources for data interpretation.This shared approach is not just a mere structural change; it's a strategic move towards greater efficiency. By pooling resources, LLaMA2's GQA system significantly reduces the amount of computational power and memory required. The beauty of GQA lies in its balance. It strides the middle path, offering more resource efficiency than the standard Multi-Head Attention (MHA) – where each head works in isolation – yet preserving more diversity and depth than a setup where all heads share the same resources Multi Query Attention (MQA).

Byte Pair Encoding (BPE) is a clever technique in natural language processing that strikes a perfect balance between managing a vast vocabulary and capturing the nuances of language. Imagine you have the word "helpful" and "unhelpful" in your dataset. Instead of treating these as completely separate entities, BPE breaks them down into smaller, shared components like "help", "ful", and "un". Initially, BPE treats each character as a separate unit. Then, iteratively, it merges the most frequent pairs of characters or sequences. For instance, "h" and "e" might first combine to "he", then "hel", and so on, until "helpful" and "unhelpful" are efficiently broken down into common subwords.

This method shines because languages inherently reuse parts of words (like prefixes, roots, and suffixes) across different contexts. By identifying and utilising these common subwords, BPE allows a language model to handle an extensive array of words without needing to know each one individually. It's especially handy for new or rare words, which the model can decipher by breaking them into known subwords. Thus, BPE provides a compact yet comprehensive toolkit for language models, enabling them to grasp the intricacies of language with a manageable vocabulary size, making it an ingenious solution to a complex problem.

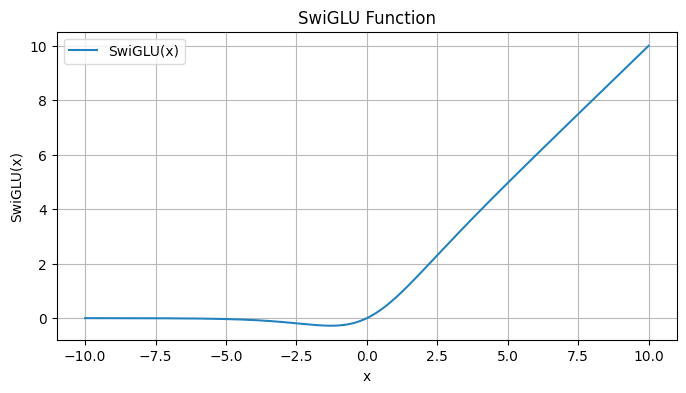

SwiGLU Activation Function (SwiGLU), short for Sigmoid-Weighted Linear Unit, represents a nuanced evolution in neural network activation functions, designed to enhance the processing capability of deep learning models. At its core, SwiGLU operates on a simple yet powerful mathematical principle: it multiplies a linear transformation of the input data with a sigmoid-activated version of the same data. Mathematically, this can be expressed as

$output = (Wx + b) ⊙ sigmoid(W'x + b')$

where $W$ and $W'$ are weight matrices, $b$ and $b'$ are biases, $x$ is the input, and $⊙$ denotes element-wise multiplication.

This formula essentially combines a straightforward linear transformation ($Wx + b$) with a gating mechanism ($sigmoid(W'x + b')$). The sigmoid function here acts as a filter, deciding which parts of the input are relevant and should be amplified, and which parts should be dampened. This selective amplification imbues SwiGLU with its intuitive appeal; it's akin to a person in a noisy room focusing on a single conversation while tuning out irrelevant background noise. By emphasising important features of the input while de-emphasizing others, SwiGLU enables neural networks to learn more effectively, honing in on crucial patterns and correlations in complex data sets, thereby enhancing the model's overall performance and efficiency.

Rotary Positional Embedding (RoPE) stands out as a transformative approach in the realm of natural language processing, particularly within the sophisticated architecture of Transformer models. At its heart, RoPE addresses a fundamental challenge in language understanding: recognizing the significance of the position of words in a sentence. Unlike traditional methods that add positional information as a separate layer, RoPE ingeniously integrates this information directly into the token embeddings through a rotational mechanism. This technique involves mathematically rotating the embedding of each word or token in a sequence, with the angle of rotation being unique for each position. This rotation effectively encodes the position of a word in a way that is inherently compatible with the self-attention mechanism of Transformers. The brilliance of RoPE lies in its ability to maintain the relative positional relationships between words, allowing the model to discern not only the presence of specific words but also their contextual relevance based on their positioning. For instance, in processing a phrase, RoPE helps the model to understand the nuanced difference in meaning when the same words appear in different orders. This integration of positional context directly into the token processing enhances the efficiency of the Transformer's attention mechanism, leading to more accurate and contextually aware language models. RoPE, therefore, represents a significant step forward in developing AI systems that can understand and interpret human language with a higher degree of sophistication and nuance.

Conclusion

In summary, LLaMA2 stands as a testament to Meta AI's commitment to innovation, efficiency, and ethical AI development. With its advanced features and open-source availability, it is poised to revolutionise the field of AI and NLP. As we conclude this chapter, remember that our journey with LLaMA2 is just beginning. Stay tuned for our next instalment, where we'll dive hands-on into the realms of text generation and fine-tune LLaMA2 for specialised tasks. Join us as we continue to explore the limitless potentials of LLaMA2, unlocking new possibilities and shaping the future of AI communication.

Contributors

Amita Kapoor: Author, Research & Code Development

Narotam Singh: Copy Editor, Design & Digital Management