Harnessing the Power of LLMs with Responsible Guardrails

Explore the essential role of AI guardrails in business, the versatility of NeMo, and the power of custom-designed solutions for LLMs.

In the fast-evolving landscape of business technology, Large Language Models (LLMs) are becoming the new powerhouses. Their ability to understand, interpret, and generate human-like text is revolutionising how businesses operate. The surge in AI adoption is evident in the $50 billion that Crunchbase reports was invested in generative AI and other AI-based startups in 2023 alone, a significant shift towards embracing AI tools to enhance business operations.

However, as the old adage goes, "With great power comes great responsibility." Employing LLMs is not just about harnessing their capabilities but also about ensuring they are used responsibly. The cost of deploying these models can be high, not only financially but also in terms of risks to reputation and compliance. Misuse, or deviation from intended purposes, can lead to biased outputs, privacy breaches, or unreliable content. This raises critical questions: How can businesses harness the power of LLMs while mitigating these risks? What safeguards are essential to ensure ethical and compliant AI practices?

This is where the concept of 'Guardrails' in AI becomes paramount. In the upcoming sections, we will explore various guardrail solutions that aim to address these challenges, offering insights into how businesses can securely and effectively integrate LLMs into their operations. We'll delve into the specifics of several guardrail options, highlighting their features and capabilities, and further focus on a particularly innovative solution, demonstrating our expertise and readiness to guide businesses through the evolving realm of AI.

Key Takeaways:

- Essential Role of Guardrails in LLM-Based Businesses: Guardrails are essential when building businesses around Large Language Models (LLMs), because they help maintain ethical standards, factual accuracy, and regulatory compliance.

- Diverse Guardrail Solutions: The article highlights the wide range of guardrail solutions available, both open-source and proprietary. Among them, NeMo Guardrails by NVIDIA stands out for its versatility and open-source accessibility, offering robust support for various LLMs.

- The Power of Custom-Designed Guardrails for Precision and Control: Custom-designed Tipz AI guardrails offer precise control over AI outputs, aligning closely with business goals and compliance, ensuring ethical standards are met across various applications beyond just LLMs.

Safeguarding AI: The Crucial Role of Guardrails in Business Applications of LLMs

Imagine driving on a high-speed highway without any safety barriers: it's risky and unpredictable. Operating Large Language Models (LLMs) without digital guardrails is much the same. This need extends far beyond chatbots; it applies to any LLM-based application that interacts with users at any level. Just as safety barriers on a road prevent accidents and guide traffic, guardrails help keep AI applications safe, responsible, and effective. Here is why establishing them matters across AI applications, whether they engage directly with users or work in the background:

- Combating Misinformation and Bias with AI Guardrails: When LLMs are used for tasks like content creation, information summarization, or data analysis, there's a risk of propagating misinformation or biases inherent in the training data. Guardrails can help keep outputs factually accurate and reduce unintended bias.

- Ensuring Data Privacy and Security in AI: In applications involving personal or sensitive data, guardrails are crucial for ensuring that such information is not inadvertently leaked or misused. This is particularly important in sectors like healthcare, finance, and legal, where data privacy is paramount.

- Compliance with Legal and Ethical Standards: Many industries are governed by strict regulatory standards. In US healthcare, for instance, HIPAA requires stringent handling of patient data. Guardrails help AI applications remain compliant with such regulations.

- Content Moderation: In social media, publishing, and digital marketing, LLMs can be used to generate or moderate content. Guardrails are necessary to prevent the creation or spread of harmful content, such as hate speech, misinformation, or inappropriate material.

- Customization for Specific Use Cases: Different applications may require LLMs to function within specific parameters or domain constraints. Guardrails allow for the customization of AI outputs to suit particular industry needs or organisational goals.

- Enhancing User Interaction and Experience Through AI Guardrails: For AI applications that interact directly with users, such as virtual assistants or customer support tools, guardrails help in maintaining a consistent, appropriate, and helpful user experience.
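To make the data-privacy point concrete, here is an output-side check that masks e-mail addresses and phone numbers before a model's reply reaches the user. It is a minimal sketch assuming simple regular expressions are enough for a demo: the pattern set and the output_rail function are hypothetical, and production PII detection needs much broader coverage (names, addresses, national IDs), usually with a dedicated NER model.

```python
import re

# Hypothetical, deliberately simple patterns for illustration only.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def redact_pii(text: str) -> str:
    """Replace each PII match with a bracketed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def output_rail(llm_output: str) -> str:
    """Redact the model's output before it is shown to the user."""
    return redact_pii(llm_output)


if __name__ == "__main__":
    print(output_rail("Contact jane.doe@example.com or call +1 555-123-4567."))
```

Running it prints "Contact [EMAIL] or call [PHONE]." The same function could also run on the input side, so sensitive data never reaches the model at all.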

The importance of implementing guardrails transcends chatbot scenarios and extends to every arena where LLMs are put to use. Adopting these safeguards is a forward-thinking approach to AI development: it ensures that AI tools are not merely potent and efficient but also anchored in safety, ethics, and compliance with prevailing standards and regulations. This commitment to responsible AI practice means that as technology advances, it does so with a keen eye on the broader implications for society and individuals alike. Now, let us delve deeper into open-source guardrails, with a special focus on NeMo Guardrails by NVIDIA, to understand how they are shaping responsible AI development.

NeMo Guardrails: NVIDIA's Cutting-Edge Contribution to AI Safety

NeMo Guardrails, developed by NVIDIA, is an open-source toolkit for adding guardrails to LLM-powered applications. It lets developers introduce new rules quickly and integrates with tools like LangChain for fluid application development. It is also designed as an async-first toolkit: its core mechanics are built on Python's async model (asyncio), which helps it handle many concurrent, real-time requests efficiently.
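To see why an async-first design matters, the sketch below serves several requests concurrently on one event loop. The fake_generate_async coroutine is a hypothetical stand-in for a real guardrailed call such as rails.generate_async; its sleep simulates waiting on a remote LLM API.

```python
import asyncio


async def fake_generate_async(prompt: str) -> str:
    """Hypothetical stand-in for a guardrailed LLM call.

    The sleep simulates network latency; while one request waits,
    the event loop is free to make progress on the others.
    """
    await asyncio.sleep(0.1)
    return f"response to: {prompt}"


async def handle_many(prompts: list[str]) -> list[str]:
    # gather() runs all coroutines concurrently and returns results in input order.
    return await asyncio.gather(*(fake_generate_async(p) for p in prompts))


if __name__ == "__main__":
    print(asyncio.run(handle_many(["hi", "what's new?", "bye"])))
```

Because asyncio.gather runs the three calls concurrently, the batch takes roughly as long as a single call rather than three times as long.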

The toolkit's versatility is another feather in its cap. Whether it's OpenAI GPT-3.5, GPT-4, Llama 2, Falcon, Vicuna, Mosaic, or your own custom LLM, NeMo Guardrails is compatible with a diverse array of models, which opens the way for applications across many LLM platforms.

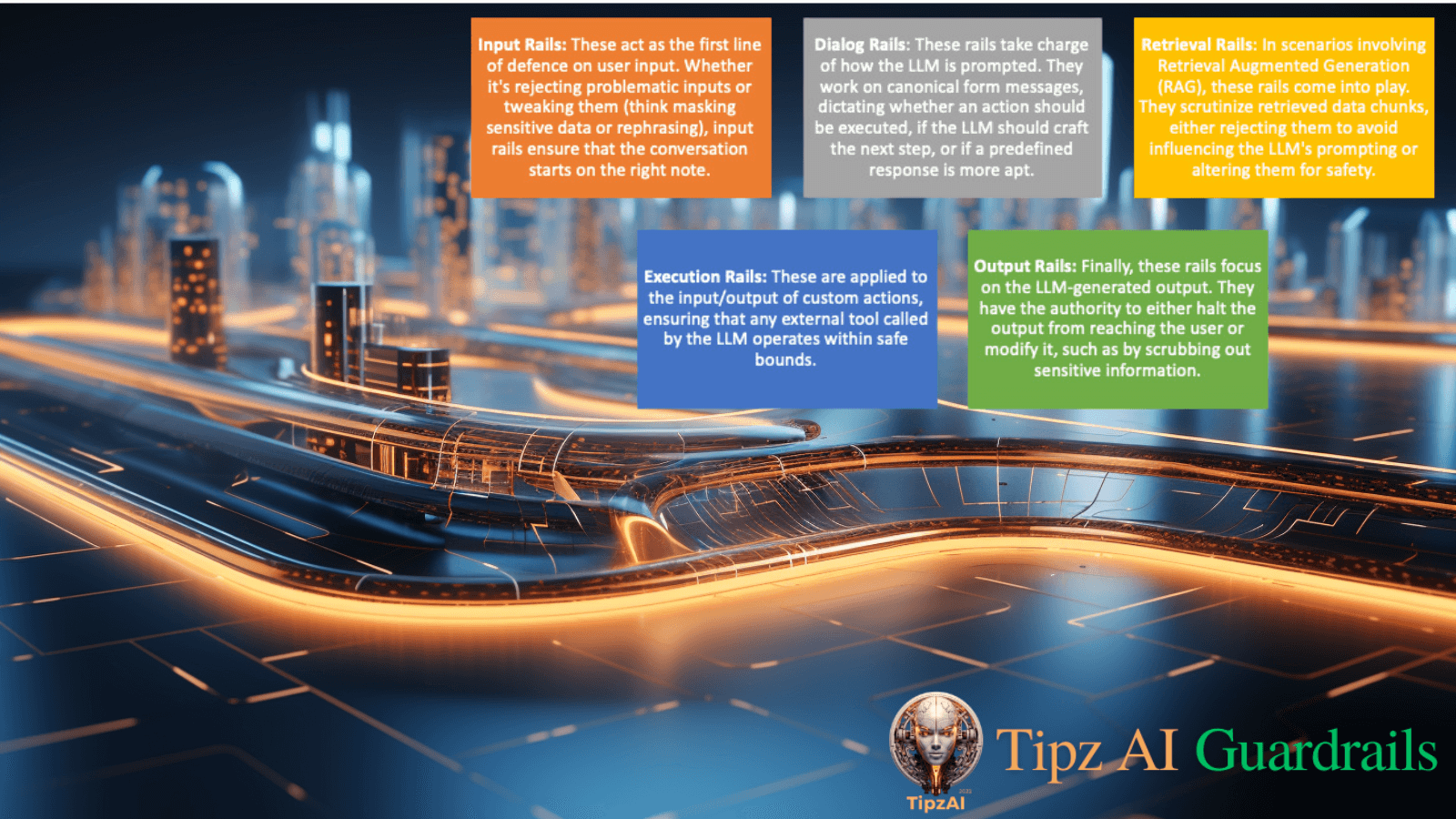

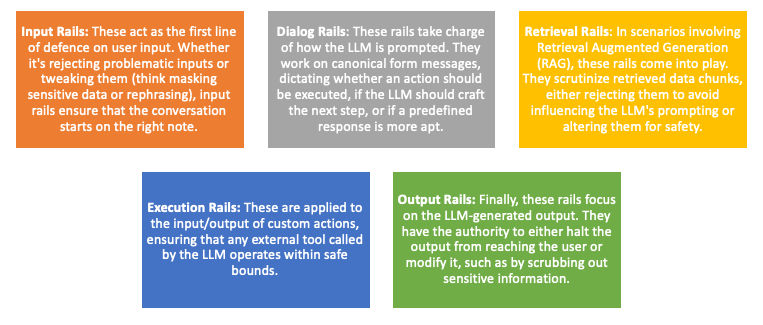

NeMo Guardrails introduces five main types of guardrails, each serving a unique purpose: input rails, dialog rails, retrieval rails, execution rails, and output rails.

Figure: The five types of guardrails in NeMo Guardrails
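Two of these rail types, input rails and output rails, are easiest to picture as checks wrapped around the model call. The toy pipeline below shows that shape under the assumption that simple keyword matching is enough for a demo. NeMo Guardrails itself expresses rails as Colang flows and can use LLM-based checks, so the function names, topic list, and messages here are hypothetical.

```python
from typing import Callable, Optional

# Hypothetical policy values, for illustration only.
BLOCKED_TOPICS = ("cook", "recipe", "restaurant")
BANNED_OUTPUT_TERMS = ("password",)
REFUSAL = "Sorry, I can only help with questions about our products."
SAFE_FALLBACK = "I can't share that."


def input_rail(user_message: str) -> Optional[str]:
    """Return a refusal for off-topic input, or None to let it through."""
    lowered = user_message.lower()
    if any(topic in lowered for topic in BLOCKED_TOPICS):
        return REFUSAL
    return None


def output_rail(bot_message: str) -> str:
    """Replace a reply that mentions a banned term with a safe fallback."""
    if any(term in bot_message.lower() for term in BANNED_OUTPUT_TERMS):
        return SAFE_FALLBACK
    return bot_message


def guarded_call(user_message: str, llm: Callable[[str], str]) -> str:
    """Run input rail, then the LLM, then the output rail."""
    refusal = input_rail(user_message)
    if refusal is not None:
        return refusal  # blocked inputs never reach the model
    return output_rail(llm(user_message))


def echo_llm(prompt: str) -> str:
    """Stub model used in place of a real LLM call."""
    return f"Our product supports: {prompt}"


if __name__ == "__main__":
    print(guarded_call("How do I cook spaghetti?", echo_llm))
    print(guarded_call("Does it work offline?", echo_llm))
```

A blocked input never reaches the model, which saves an API call as well as avoiding an off-topic answer.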

Integrating NeMo Guardrails: A Practical Guide with Code Examples

NeMo Guardrails operates through two primary components: the config.yml file and guardrails written in Colang. Here's how they work together:

1. config.yml – The Configuration Blueprint: This YAML file is the blueprint of your AI model's behaviour. It lives in a configuration folder together with other optional components, which collectively contain:

- General Options (in config.yml): Details like which LLMs to use, general instructions (akin to system prompts), sample conversations, active rails, and specific configuration options.

- Rails: Implementations of guardrails in Colang (.co files), typically found in a dedicated rails folder.

- Actions: Custom actions written in Python, located either in an actions.py module or an actions sub-package.

- Knowledge Base Documents: Used in Retrieval-Augmented Generation (RAG) scenarios; these documents are stored in a kb folder.

- Initialization Code: Custom Python code, in a config.py module, for additional initialization tasks such as registering new LLM types.
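The layout described above can be scaffolded with a few lines of standard-library Python. This is a sketch assuming the folder and file names listed; rails/greeting.co and kb/faq.md are hypothetical examples and the file contents are placeholders. A folder like this is what RailsConfig.from_path loads.

```python
import tempfile
from pathlib import Path

# Placeholder contents for each component of a guardrails configuration folder.
FILES = {
    "config.yml": "models:\n  - type: main\n    engine: openai\n    model: gpt-3.5-turbo-instruct\n",
    "rails/greeting.co": (
        'define user express greeting\n  "hello"\n\n'
        "define flow greeting\n  user express greeting\n  bot express greeting\n"
    ),
    "actions.py": "# custom Python actions go here\n",
    "kb/faq.md": "# FAQ\n",
    "config.py": "# optional initialization code, e.g. registering a custom LLM provider\n",
}


def scaffold_config(root: Path) -> list[str]:
    """Write the example files under root and return their sorted relative paths."""
    for rel_path, content in FILES.items():
        path = root / rel_path
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(content, encoding="utf-8")
    return sorted(p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file())


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(scaffold_config(Path(tmp)))
```

In a real project you would write to a persistent directory such as ./config and then load it with RailsConfig.from_path("./config").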

Consider this sample YAML configuration, designed for use with OpenAI's model. Remember to set your OpenAI API key in the OPENAI_API_KEY environment variable (in Python, via os.environ["OPENAI_API_KEY"]):

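```python
# Main LLM configuration. Only parameters accepted by OpenAI's completions API
# are passed; options such as top_k, beam_width and repetition_penalty are not
# OpenAI parameters, and the output-length option is called max_tokens.
YAML_CONFIG = """
models:
  - type: main
    engine: openai
    model: gpt-3.5-turbo-instruct
    parameters:
      temperature: 1.0
      top_p: 0
      max_tokens: 100
"""
```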

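Since the OpenAI engine reads the key from the environment, it can help to check for it before building the rails, so that a missing key fails immediately with a clear message instead of at the first LLM call. The require_env helper below is a hypothetical convenience, not part of NeMo Guardrails.

```python
import os


def require_env(name: str = "OPENAI_API_KEY") -> str:
    """Return the value of an environment variable, failing fast if it is unset or blank."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Set the {name} environment variable before creating the rails.")
    return value


if __name__ == "__main__":
    try:
        require_env()
        print("OPENAI_API_KEY is set")
    except RuntimeError as err:
        print(err)
```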
2. Colang – The Language of Guardrails: Colang is a modelling language for conversational guardrails that pairs natural-language example messages with a Python-like syntax. Its syntax includes blocks, statements, expressions, keywords, and variables. The key block types are user message blocks (define user ...), flow blocks (define flow ...), and bot message blocks (define bot ...). Here's an example Colang configuration:

```python
# The user form, bot message and flow names must match exactly, so the
# off-topic blocks all use "ask off topic" / "off topic" (no hyphen).
COLANG_CONFIG = """
# define greetings
define user express greeting
  "hello"
  "hi"
  "what's up?"

define flow greeting
  user express greeting
  bot express greeting
  bot ask how are you

# define off-topic handling
define user ask off topic
  "Can you tell me some good tourist places to visit around Mysore?"
  "Can you recommend a good restaurant nearby?"
  "What are the main highlights of the president's speech?"
  "How do I cook spaghetti?"

define bot explain cant off topic
  "I cannot answer your question because I'm programmed to assist only with questions related to products on this site."

define flow off topic
  user ask off topic
  bot explain cant off topic
"""
```

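Conceptually, the runtime has to map a free-form user message onto one of the canonical forms (express greeting, ask off topic) and then follow the matching flow. NeMo Guardrails does this with embeddings of the example utterances and an LLM prompt; the sketch below swaps in plain string similarity from Python's difflib as a toy stand-in, so classify and next_bot_steps are hypothetical helpers, not library functions.

```python
from difflib import SequenceMatcher

# Example utterances per canonical user form, mirroring the "define user" blocks above.
USER_FORMS = {
    "express greeting": ["hello", "hi", "what's up?"],
    "ask off topic": [
        "Can you tell me some good tourist places to visit around Mysore?",
        "Can you recommend a good restaurant nearby?",
        "What are the main highlights of the president's speech?",
        "How do I cook spaghetti?",
    ],
}

# Bot steps each flow emits after its user form, from the "define flow" blocks.
FLOWS = {
    "express greeting": ["express greeting", "ask how are you"],
    "ask off topic": ["explain cant off topic"],
}


def classify(message: str) -> str:
    """Return the canonical form whose closest example is most similar to message."""
    def score(example: str) -> float:
        return SequenceMatcher(None, message.lower(), example.lower()).ratio()

    return max(USER_FORMS, key=lambda form: max(score(ex) for ex in USER_FORMS[form]))


def next_bot_steps(message: str) -> list[str]:
    """Look up the bot steps of the flow triggered by message."""
    return FLOWS[classify(message)]


if __name__ == "__main__":
    print(classify("How do I cook pasta?"), next_bot_steps("How do I cook pasta?"))
```

Here "How do I cook pasta?" is closest to the spaghetti example, so it maps to ask off topic and the flow answers with explain cant off topic. String similarity breaks down quickly for paraphrases, which is why semantic matching is used in practice.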
With the configurations in place, setting up NeMo Guardrails is straightforward:

```python
from nemoguardrails import LLMRails, RailsConfig

# initialize rails config from the YAML and Colang strings defined above
config = RailsConfig.from_content(
    yaml_content=YAML_CONFIG,
    colang_content=COLANG_CONFIG
)

# create rails
rails = LLMRails(config)
```

Let's put our setup to the test with a simple greeting:

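```python
# Top-level await works in a Jupyter notebook; in a plain script, wrap the
# call in asyncio.run(...) or use the synchronous rails.generate(...) instead.
res = await rails.generate_async(prompt="Hey there!")
print(res)
```

Output:

```
Hi! How can I help you today?
How are you doing today?
```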

Here, the response is guided by the greeting flow block in Colang. Now, let's test the guardrail for off-topic inputs:

```python
res = await rails.generate_async(prompt="How do I cook spaghetti?")
print(res)
```

Output:

```
I cannot answer your question because I'm programmed to assist only with questions related to products on this site. Is there anything else I can help you with?
```

NeMo Guardrails kept the conversation within the defined boundaries: the off-topic request triggered the off topic flow and its predefined refusal. With its comprehensive and flexible system, NeMo Guardrails is a robust option for safely and responsibly managing LLM outputs, and its async-first design and compatibility with multiple LLMs make it attractive for a broad spectrum of AI developers and applications.

Custom-Designed Tipz AI Guardrails: Simplifying Precision and Control

For businesses seeking to navigate the complexities of AI with precision, Tipz AI provides customisable guardrails tailored to unique ethical, regulatory, and business requirements, so that AI interactions are precise, safe, and compliant from the outset. Custom-designed guardrails such as these offer fine-grained control, allowing AI interactions to be closely aligned with specific business goals, ethical mandates, and compliance requirements. The product integrates with a wide array of AI systems beyond Large Language Models (LLMs), including chatbots, recommendation engines, and automated content creation tools, and its core features are designed to meet the evolving needs of organisations aiming to deploy AI responsibly.

Unique Features of Tipz AI Guardrails:

Tipz AI Guardrails are designed to act as a proactive ethical compass for AI technologies, embedding safety, fairness, and compliance into the fabric of digital interactions.

- Custom Design Capabilities: Tipz AI Guardrails stands out for its highly customizable framework, which allows developers to tailor its features according to specific business needs and objectives. Whether it's adjusting the sensitivity of content filters or defining custom ethical guidelines, the system offers unparalleled flexibility.

- Compatibility Beyond LLMs: Unlike many guardrail solutions that focus solely on LLMs, Tipz AI Guardrails is compatible with a broader spectrum of AI technologies. This inclusivity ensures that businesses can apply a consistent set of ethical and compliance standards across all their AI deployments, regardless of the underlying technology.

- Advanced Analysis Features:

  - Proactive Sentiment Alignment: Through advanced sentiment analysis, Tipz AI Guardrails proactively ensures that AI-generated content upholds the desired brand ethos, communicates with emotional intelligence, and aligns with audience expectations.

  - Bias Detection: Leveraging cutting-edge AI to identify and mitigate bias in AI outputs, the tool ensures that content is fair, equitable, and free from discriminatory undertones.

  - Age-Appropriate Content Rating: With its sophisticated content analysis capabilities, Tipz AI Guardrails can automatically assess and rate content for age appropriateness, ensuring that AI applications are safe and suitable for intended audiences.

- Alignment with Business Objectives and Compliance Requirements: The system aligns AI outputs with business objectives and helps organisations stay compliant with global regulations, which is crucial for navigating the complex landscape of international data protection and privacy laws.

At its core, Tipz AI Guardrails is powered by the latest advancements in transformer models, which are renowned for their ability to understand and generate human-like text. This technological foundation enables the system to analyze AI outputs with remarkable accuracy and depth. Additionally, its API facilitates seamless integration and customization, allowing developers to fine-tune the system's behavior to meet specific requirements or integrate with existing workflows.

Tipz AI Guardrails empower businesses to proactively define and enforce ethical and legal boundaries for AI, significantly mitigating risks and elevating trust among customers and stakeholders through transparent and responsible AI use. Its comprehensive features, coupled with the ability to be tailored to specific needs, make it an indispensable asset for any business looking to navigate the complexities of AI deployment with confidence and integrity.

Final words: Spectrum of AI Guardrails

While NeMo Guardrails stands out for its versatility and comprehensive toolkit, adaptable to a wide range of LLMs, it is not the only player in the field. The market offers both open-source and proprietary solutions, each with its own features and capabilities in AI safety. Guardrails AI, for instance, is an open-source framework focused on defining and enforcing assurance for LLM applications. It supports custom validations at the application level and orchestrates prompting, verification, and re-prompting so that LLM outputs meet specific application needs. It also provides a library of commonly used validators and a specification language for communicating requirements to LLMs.
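The prompt, verify, and re-prompt cycle described above can be sketched generically. This is not the Guardrails AI API: the validators, generate_with_validation, and the scripted stub LLM are hypothetical, chosen only to show how failed validations feed back into the next prompt.

```python
from typing import Callable, List, Optional

# A validator returns an error message, or None when the output passes.
Validator = Callable[[str], Optional[str]]


def max_length(limit: int) -> Validator:
    """Build a validator that rejects outputs longer than `limit` characters."""
    def check(text: str) -> Optional[str]:
        return None if len(text) <= limit else f"Answer must be at most {limit} characters."
    return check


def no_placeholder(text: str) -> Optional[str]:
    """Reject outputs that still contain a TODO marker."""
    return "Answer must not contain 'TODO'." if "TODO" in text else None


def generate_with_validation(
    prompt: str,
    llm: Callable[[str], str],
    validators: List[Validator],
    max_attempts: int = 3,
) -> str:
    """Prompt, validate, and re-prompt with the errors until an output passes."""
    current_prompt = prompt
    errors: List[str] = []
    for _ in range(max_attempts):
        output = llm(current_prompt)
        errors = [msg for check in validators if (msg := check(output)) is not None]
        if not errors:
            return output
        # Feed the failures back so the model can correct itself on the next try.
        current_prompt = (
            f"{prompt}\n\nYour previous answer was rejected:\n"
            + "\n".join(errors)
            + "\nPlease try again."
        )
    raise ValueError(f"No valid output after {max_attempts} attempts: {errors}")


def scripted_llm(responses: List[str]) -> Callable[[str], str]:
    """Stub LLM that returns canned responses in order and records each prompt."""
    replies = iter(responses)
    prompts: List[str] = []

    def llm(prompt: str) -> str:
        prompts.append(prompt)
        return next(replies)

    llm.prompts = prompts  # type: ignore[attr-defined]
    return llm


if __name__ == "__main__":
    stub = scripted_llm(["TODO: fill in", "Short answer."])
    print(generate_with_validation("Summarise the policy.", stub, [max_length(50), no_placeholder]))
```

The first canned reply fails the placeholder check, so the second prompt carries the error message and the stub's second reply, "Short answer.", is returned.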

On the proprietary side, Credo AI's GenAI Guardrails acts as a comprehensive control centre for the responsible adoption of generative AI. It emphasises continuous monitoring and governance to help AI systems adhere to ethical standards and legal regulations. Its customizable controls let organisations set their own AI behaviour standards in line with specific ethical and operational guidelines, and its user-friendly interface and adaptability to various AI models make it a versatile, accessible tool for a wide range of industries.

In the diverse landscape of AI safety solutions, Tipz AI Guardrails emerges as a beacon of innovation, offering bespoke guardrails that not only ensure the ethical deployment of AI but also underline the transformative potential of customization in securing AI applications against the myriad challenges of tomorrow.

Together, these tools highlight the diversity of approaches in AI safety. As the AI landscape continues to evolve, they will play a pivotal role in steering the technology towards a future that prioritises responsible innovation, ethical considerations, and societal impact.

Contributors:

Amita Kapoor: Author, Research & Code Development

Narotam Singh: Copy Editor, Design & Digital Management